Let's dive together into the world of web content caching with Nginx. Starting with the basics, we will quickly move to more advanced topics and look at some real-world examples. If you make it to the end of this blog post, you will bear the title of "Caching King".

Introduction

Let me start with an inspiring story.

A friend of mine took a contract to build a simple web application. The app was used during a crowdfunding event to visualize live statistics about the money raised for a children's hospital. People donated money, and the total amount was displayed together with other details on a big screen. The service was also available online. The application was very simple and the server strong. Luckily, the generosity and love were much bigger, and the server started to slow down. My friend panicked at first, but he found a clever and easy solution: he used Nginx’s caching capabilities.

Today I will show you how to make the internet faster and more stable, and how you can easily adopt Nginx’s caching to boost your applications.

We will start with caching concepts and then jump into the available Nginx caching configuration directives. I will explain why they are useful and how you can profit from them. Armed with the right knowledge, we will fight our way through a real-world example.

The Caching Concept

Before we start I need to make an a priori assumption, namely that the content is quasi real time. This means that our data, or if you wish our HTTP responses, do not change very often. Please note that "not very often" is not a strict definition. It can mean 1 second, 1 hour, 1 week and so on.

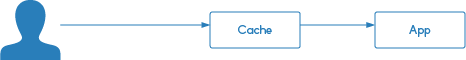

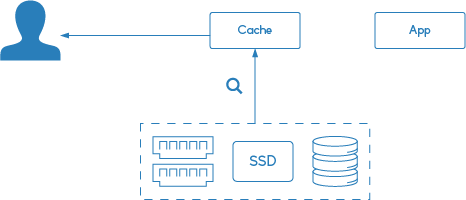

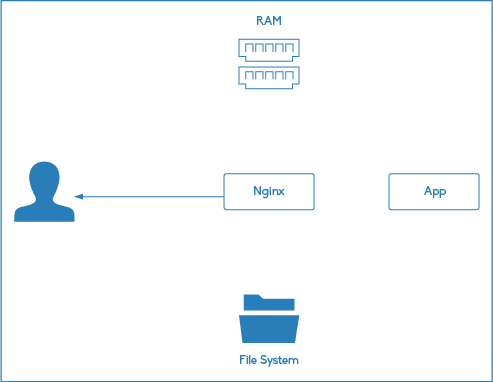

Ok, having this in mind, let’s start with our initial situation with no caching involved, which looks like this.

The user makes a request to the application and gets a response back.

Adding caching to this scenario is a simple way to improve performance, capacity and availability. It works by saving responses from servers or applications to local storage or memory.

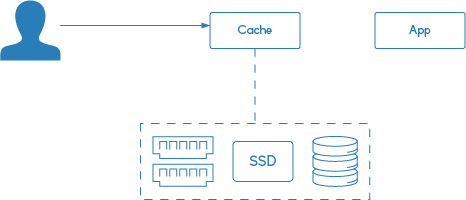

Let’s consider this simple situation. The client makes a request to the application like in the previous example, but this time the request goes through the cache.

The application responds, and the cache forwards the response to the client and also saves it locally.

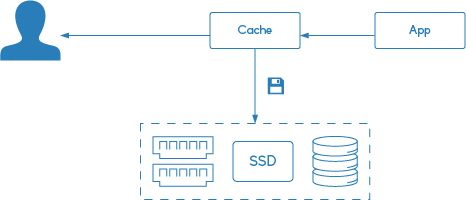

Next time the user makes the same request, the cache checks whether it already has this response.

And if that’s the case, it responds immediately to the user, by serving this cached content.

Please note that the second request doesn’t involve the application at all.

Caching adds complexity to the system but it comes with great benefits:

- it improves site performance - requests don’t have to go through the whole rendering process

- it increases capacity - by reducing load on origin servers

- it also gives greater availability - by serving stale content when the origin server is down

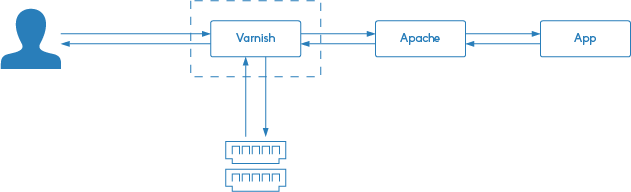

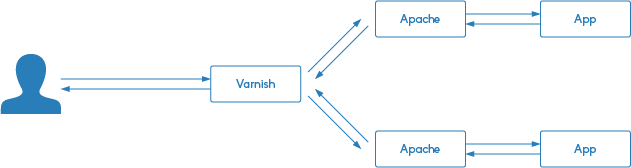

Before we start with Nginx, I want to mention that there are also alternatives for caching content. Squid Cache and Varnish Cache are my favourite examples. The standard use case which I saw during my career looks like this:

You simply put the cache in front of the HTTP server, either on the application server or on a dedicated machine.

This obviously adds even more complexity to the system, but it might be a better fit for your case. Read, compare features and decide.

In this post we will focus our attention only on Nginx.

Caching with Nginx

Nginx is an HTTP server, and it’s brilliant at serving static files and proxying requests. Because of its asynchronous nature it stands out with lightweight resource utilization.

When it comes to caching, Nginx has integrations for:

- HTTP servers

- FastCGI

- uwsgi

- SCGI

Now that we know how caching works and what Nginx is, let’s look at Nginx’s implementation of caching.

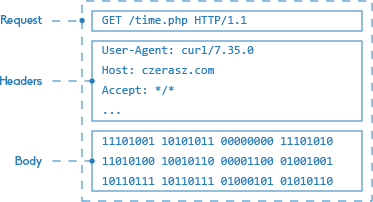

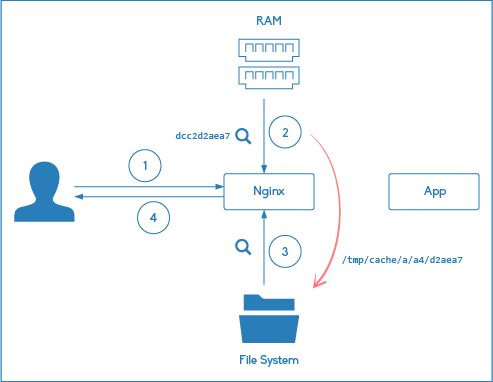

An example should make it clear. First the client makes a request.

A sample HTTP request is presented on the diagram below:

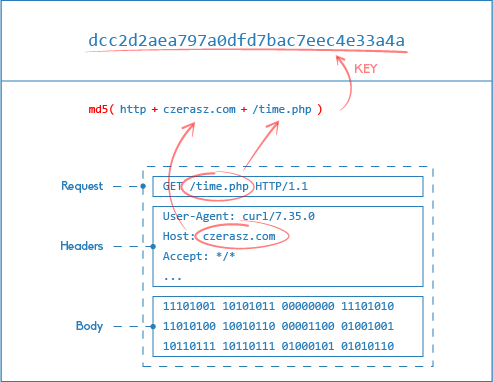

Based on some details from it Nginx generates a hash key.

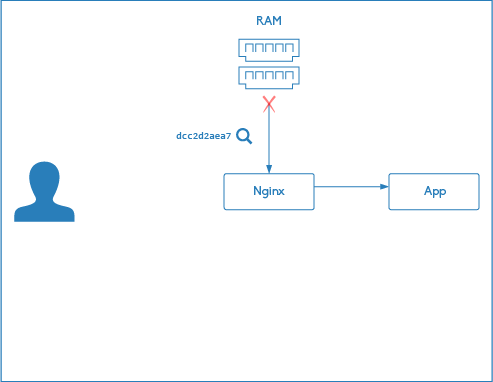

Now Nginx checks if this hash key already exists in memory. If not, the request goes to the application.

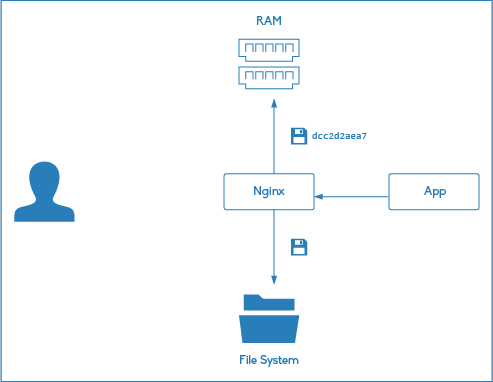

The application answers and its response is saved to the file system.

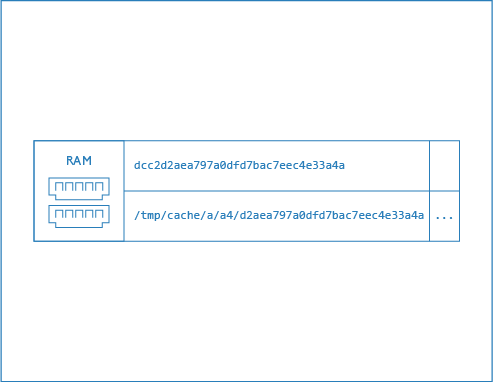

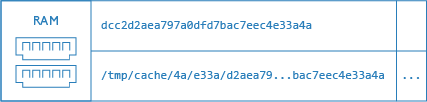

Additionally the hash key, generated earlier, is saved into memory. To make it easier to understand I visualise the hash key value together with the location of the saved file. But remember that Nginx stores in memory only the hash key.

Finally the user gets the response back.

When our client requests the same URL a second time, Nginx again generates the hash key and checks if it exists in memory. This time it’s there, so Nginx serves the cached file associated with the hash key from the file system.

Please note that the second request doesn’t involve the application at all.

For the second request clients don’t wait for the application to first fetch data from the database and then render the page. Instead Nginx serves a static file with a cached version of the response.

Additionally, those files are most probably cached in memory as well, this time not by Nginx (although it gives the OS some hints) but by the operating system. Linux uses otherwise free memory as a page cache for recently read files, which makes reading them from the file system extremely fast.

Configuration - Available Directives

Now that we know the technical background of how Nginx’s cache works, let’s see how we can configure it.

First, on the http level, we define where the data should be stored. We specify the path on the file system as well as the name and size of the memory zone. The memory zone stores only meta information on cached items - the hash keys.

Note

Each item takes about 0.125 kB of memory, so we can store a lot of them. In 1 MB, for example, Nginx can store about 8000 cache keys.

Basics

The basic cache definition looks like this:

proxy_cache_path /data/nginx/cache keys_zone=zone_name_one:10m;

To enable it we just use the proxy_cache directive.

proxy_cache zone_name_one;

This video will show you the basics of Nginx caching.

The Github project mentioned in the video can be found here.
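To see how the two directives fit together, here is a minimal sketch of a complete configuration. The backend address 127.0.0.1:8080 and the listen port are assumptions made for illustration; only proxy_cache_path and proxy_cache come from the text above.

```nginx
# Minimal sketch: cache everything that is proxied to one backend.
events {}

http {
    # Cached responses go to /data/nginx/cache; the keys live in a
    # 10 MB shared memory zone called zone_name_one.
    proxy_cache_path /data/nginx/cache keys_zone=zone_name_one:10m;

    server {
        listen 80;  # assumed port

        location / {
            # Assumed address of the application behind Nginx.
            proxy_pass http://127.0.0.1:8080;

            # Use the zone defined above for this location.
            proxy_cache zone_name_one;
        }
    }
}
```

With roughly 8000 keys per megabyte, the 10 MB zone has room for about 80,000 cached items. Keep in mind that if the application sends no caching headers, you will most likely also need the proxy_cache_valid directive (covered later in this post) before anything is stored.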

It’s also possible to limit the amount of disk space used to store cached content, simply by adding the max_size parameter.

proxy_cache_path /data/nginx/cache keys_zone=one:10m max_size=200m;

We also have the option to say when files should be removed from the cache, regardless of their freshness, because they have not been used for a specific amount of time.

proxy_cache_path /data/nginx/cache keys_zone=one:10m inactive=60m;

And there is also a nice little parameter which lets us define the hierarchy levels of a cache.

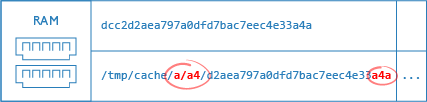

proxy_cache_path /data/nginx/cache levels=1:2 keys_zone=one:10m;

The image below illustrates the hierarchy levels configuration:

Now let’s see if you understand it correctly. Ready for a quiz? Based on the illustration below, what should the levels parameter be?

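The answer is 2:4, which is clearer if we look at the following image. Note that this is only an exercise in reading the directory layout: according to the Nginx documentation a cache can have from 1 to 3 levels, and each level accepts only the value 1 or 2.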
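The parameters shown so far can be combined in a single cache definition. The sketch below simply merges the values used in the snippets above; the sample file name in the comment is the one used in the Nginx documentation to illustrate levels=1:2.

```nginx
# Belongs in the http context.
# levels=1:2         one-character directory, then a two-character one
# keys_zone=one:10m  10 MB of shared memory for the keys
# max_size=200m      keep the cache on disk at about 200 MB
# inactive=60m       drop items not requested for 60 minutes
proxy_cache_path /data/nginx/cache
                 levels=1:2
                 keys_zone=one:10m
                 max_size=200m
                 inactive=60m;

# With levels=1:2 a cached file ends up in a path like:
#   /data/nginx/cache/c/29/b7f54b2df7773722d382f4809d65029c
# "c" is the last character of the hash, "29" the two characters before it.
```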

What/When to cache?

By default Nginx caches only GET and HEAD requests. You can change this with the proxy_cache_methods directive:

proxy_cache_methods GET HEAD POST;

You can also instruct Nginx to cache a response only after it has been requested at least 5 times.

proxy_cache_min_uses 5;

This is useful when you have a lot of content but want to cache only the requests which are really popular.
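As a rough sketch, both directives could sit together in one location. The /articles/ prefix and the backend address are hypothetical, and the zone is assumed to be defined with proxy_cache_path as shown earlier.

```nginx
# Goes inside a server block.
location /articles/ {
    proxy_pass http://127.0.0.1:8080;   # assumed backend
    proxy_cache zone_name_one;

    # Cache only GET and HEAD requests (this is also the default).
    proxy_cache_methods GET HEAD;

    # Store a response only after the same request was seen 5 times.
    proxy_cache_min_uses 5;
}
```

POST is left out here on purpose: the default cache key ($scheme$proxy_host$request_uri) does not include the request body, so caching POST responses needs extra care.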

Nginx can save us upstream bandwidth and disk writes as well. When the following directive is turned on, Nginx revalidates expired cache items with conditional requests (If-Modified-Since and If-None-Match), and if the origin answers with 304 Not Modified it does not download the content again:

proxy_cache_revalidate on;

How to cache?

With Nginx we are not forced to cache everything by the same rule. Instead we can tell Nginx which information should be used to generate the hash key, and we can do it on the http, server or location level. Here are two examples:

proxy_cache_key "$host$request_uri$cookie_user";

proxy_cache_key "$scheme$proxy_host$uri$is_args$args";

Tip

How to generate an Nginx cache hash key? Use this command:

echo -n 'httpczerasz.com/time.php' | md5sum

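We can also tell Nginx under which conditions the response shouldn’t be stored in the cache. If at least one of the listed variables is non-empty and not equal to "0", the response is not saved:

proxy_no_cache $http_pragma $http_authorization $cookie_nocache $arg_nocache;

How long to cache?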

There is a simple directive which tells Nginx how long to cache responses with a given status code:

proxy_cache_valid any 1m;

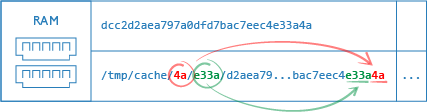

proxy_cache_valid 200 302 10m;

But mainly the headers of the origin server define the cacheability of the content:

- Expires

- Cache-Control

- X-Accel-Expires - a special Nginx header. It overrides the other headers and is useful when you need to serve different caching headers to the client.

The priority of the mentioned options is presented on the chart below, with the strongest priority on top:
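Put into configuration, the ordering could look like the sketch below: X-Accel-Expires wins if the origin sends it, otherwise Cache-Control or Expires apply, and proxy_cache_valid is only the fallback. The backend address and the 404 lifetime are assumptions, and proxy_ignore_headers is an extra directive not covered in this post, so it is shown commented out.

```nginx
location / {
    proxy_pass http://127.0.0.1:8080;   # assumed backend
    proxy_cache one;

    # Fallback lifetimes, used only when the origin sends no
    # X-Accel-Expires, Cache-Control or Expires header.
    proxy_cache_valid 200 302 10m;
    proxy_cache_valid 404 1m;           # assumed value

    # Optional: ignore the origin's caching headers so that
    # proxy_cache_valid always decides.
    # proxy_ignore_headers X-Accel-Expires Cache-Control Expires;
}
```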

Other

The following option adds a lot to the availability factor. It allows Nginx to serve stale (old, expired) content when the connection to the application failed or timed out. Add parameters such as http_500, http_502, http_503 and http_504 if stale content should also be served when the application returns a 50x status code:

proxy_cache_use_stale error timeout;

Yet another cool feature is the ability to let only the first of several simultaneous requests for the same uncached item through to the application, while the others wait for the cache to be populated. This can be enabled with:

proxy_cache_lock on;

Nginx first writes the content returned by the origin to a temporary file and then moves it into the cache directory defined in the proxy_cache_path directive. If you need to control where those temporary files are stored, use this:

proxy_temp_path /tmp/custom_cache/;

This directive works well with multiple caches. But remember that it will always be less efficient than keeping the temp path on the same file system as the cache path, because the file then has to be copied instead of simply renamed.
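A sketch of how the availability-related directives might be combined in one location is shown below. The backend address, the one-minute lifetime and the explicit lock timeout are assumptions chosen for illustration.

```nginx
location / {
    proxy_pass http://127.0.0.1:8080;   # assumed backend
    proxy_cache one;
    proxy_cache_valid 200 1m;           # assumed lifetime

    # Serve an expired copy if the application cannot be reached,
    # times out, or answers with a 50x status code.
    proxy_cache_use_stale error timeout http_500 http_502 http_503 http_504;

    # Let only one request at a time populate a missing cache item.
    proxy_cache_lock on;

    # Waiting requests are passed to the application after 5 seconds
    # (this is also the documented default).
    proxy_cache_lock_timeout 5s;
}
```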

Debugging Nginx's Cache

I believe that debugging any software the right way is even more important than actually knowing the software itself. A good debugging process guarantees a better understanding and, most importantly, solves challenges really fast.

In this chapter I will show you a few tricks to easily debug Nginx’s caching world.

The first trick is about bypassing the cache.

proxy_cache_bypass $arg_nocache $cookie_nocache $arg_comment;

The directive above allows you to specify when to skip the cache lookup. For example, each request with a nocache=true query parameter goes to the origin. Note that Nginx may still cache the result of such a request.

Another trick is adding a header with cache status information. You can do it simply by adding a header:

add_header X-Cache-Status $upstream_cache_status;

Or in a more sophisticated way presented below, which allows only local requests to view the header:

map $remote_addr $cache_status {

127.0.0.1 $upstream_cache_status;

default "";

}

...

add_header X-Cache-Status $cache_status;

When debugging the X-Cache-Status header you will appreciate the following table:

| Status | Meaning |
| --- | --- |
| MISS | The object was not found in the cache. The response was served from the origin and may have been saved to the cache. |
| BYPASS | The cache was skipped because the request matched proxy_cache_bypass. The response came from the upstream and may have been saved to the cache. |
| EXPIRED | The cached object has expired. The response was served from the upstream. |
| STALE | The object was served from the cache because of issues with the origin server response. |
| UPDATING | Stale content was served from the cache because the entry is currently being updated in response to an earlier request and proxy_cache_use_stale updating is configured. |
| REVALIDATED | proxy_cache_revalidate verified that the current cached content was still valid. |
| HIT | The object was found in the cache and served from there. |
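Both tricks can be tried together with a small location like the one sketched below. The backend address is an assumption, and pairing proxy_cache_bypass with proxy_no_cache is a choice made for this example, so that bypassed responses are not stored.

```nginx
location / {
    proxy_pass http://127.0.0.1:8080;   # assumed backend
    proxy_cache one;

    # Skip the cache lookup for requests such as /page?nocache=1 ...
    proxy_cache_bypass $arg_nocache;
    # ... and do not store the responses of such requests either.
    proxy_no_cache $arg_nocache;

    # Report MISS, HIT, BYPASS and so on in every response.
    add_header X-Cache-Status $upstream_cache_status;
}
```

A request like curl -I 'localhost/page?nocache=1' should then report BYPASS, while repeated plain requests for a cacheable page should go from MISS to HIT.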

In the following two chapters we will learn which processes are involved in the maintenance of cached files. These chapters are really short, and right after them we will jump into cool examples. So stay with me!

Cache Loader

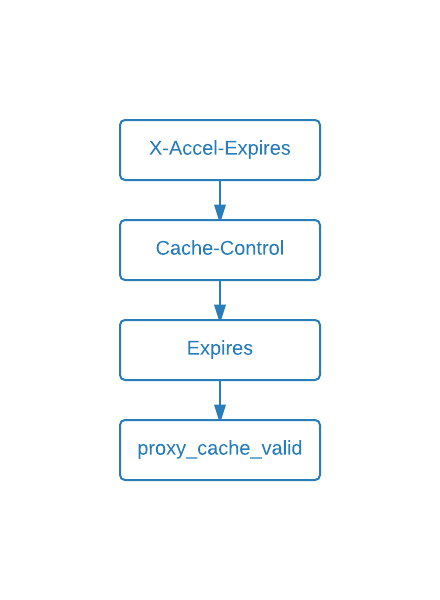

The Nginx cache loader is a process responsible for loading cache from disk.

It is run only once (on startup) and loads the metadata into the memory zone. It runs in iterations until all keys are loaded.

We can tune its behaviour (to utilize the CPU the right way) with the following options:

- loader_threshold - how long one iteration may take

- loader_files - don’t load more than this number of items in one iteration

- loader_sleep - pause time between iterations

An example is presented below:

proxy_cache_path /data/nginx/cache keys_zone=one:10m [loader_files=number] [loader_sleep=time] [loader_threshold=time];

The comic below shows that the cache loader is used only on startup and that it works in iterations defined by the described parameters.

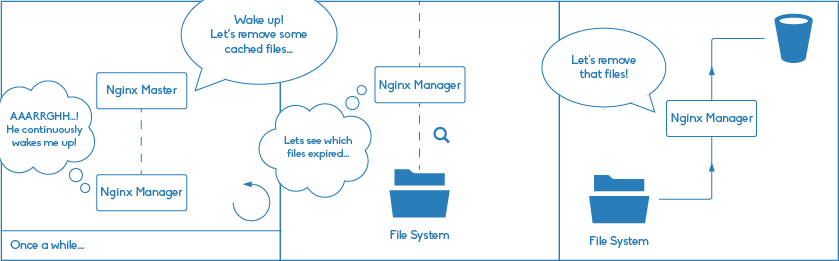
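For a concrete picture, the line below spells out the loader parameters with the default values listed in the Nginx documentation, so it should behave the same as omitting them. Treat it as a starting point for your own tuning rather than a recommendation.

```nginx
# Belongs in the http context.
# Per iteration: load at most 100 items or run for at most 200 ms,
# then pause for 50 ms before the next iteration.
proxy_cache_path /data/nginx/cache keys_zone=one:10m
                 loader_files=100
                 loader_threshold=200ms
                 loader_sleep=50ms;
```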

Cache Manager

The Nginx cache manager is a process which purges the cache over time.

It periodically checks the file storage and removes the least recently used data if the total size of the cached files exceeds max_size. It also removes files which have not been accessed for the time set by the inactive parameter, regardless of their freshness.

Watch the comic below which illustrates cache manager duties.

Examples

In this chapter I will present two real world examples.

The first one is based on what we have learned so far. We will see how to purge content which was previously cached.

The second example is a little bit more advanced. It shows how to build a small CDN based on Nginx.

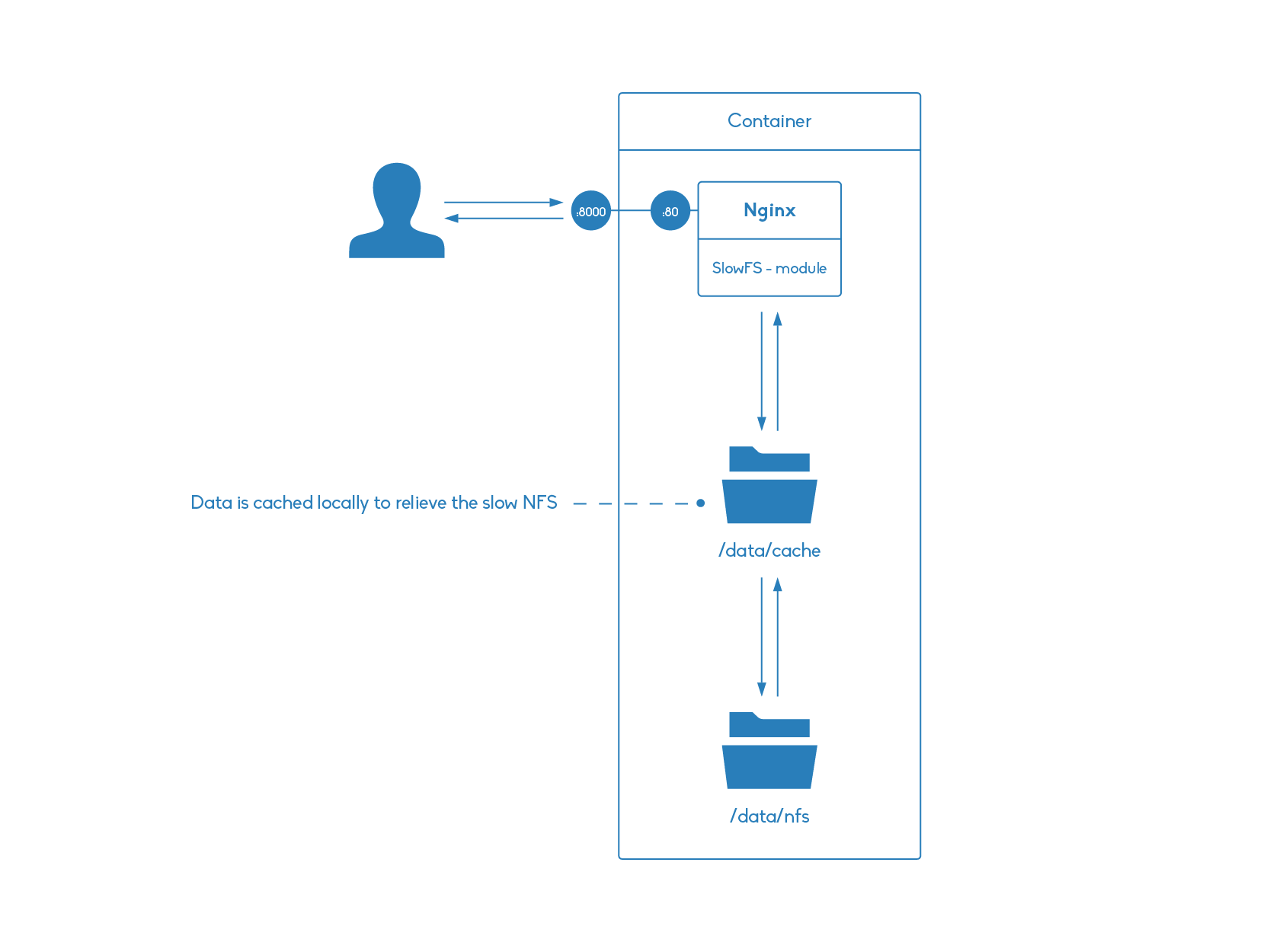

How to create a small CDN with Nginx?

This example describes how Nginx can help you create a simple, file based CDN with static content.

Let’s assume we have a distributed file system cluster. It can be based on NFS or GlusterFS or on anything you prefer. One thing those technologies have in common is that they are slow. Luckily Nginx together with the Slow FS module can overcome this weakness by caching files from a network file system on the local drive.

For this example I prepared a project on Github which you can find here. An overview is presented on the diagram below:

To get started simply clone the repository:

git clone git@github.com:czerasz/nginx-slowfs-example.git

Once you have it, go to the project’s directory and start the Docker container with:

docker-compose up

This might take a while because an Nginx compilation process takes place inside the container. To be able to use the slowfs feature we need to compile Nginx together with the Slow FS module. This is done in the Dockerfile. If you are interested in how this is done, check it out here.

Then request Nginx with curl:

$ curl -i 'localhost:8000/test-file.txt'

HTTP/1.1 200 OK

Server: nginx/1.6.2

Date: Mon, 23 Mar 2015 13:35:07 GMT

Content-Type: text/plain

Content-Length: 4

Last-Modified: Mon, 23 Mar 2015 12:48:15 GMT

Connection: keep-alive

Accept-Ranges: bytes

test

What you see is (the content of) the file stored in the "mounted" nfs-dummy directory.

If this directory were a busy NFS mount and the number of requests were high, the responses would be really slow.

But in our example the files are cached locally. You can inspect the cache directory with the following command:

$ docker exec nginxslowfs_nginx_1 tree -A /data/cache/

/data/cache/

└── 1

└── 27

└── 2bba799df783554d8402137ca199a271

The Nginx configuration for our example is simple and consists of two parts. The cache definition:

# Configure slowfs module

slowfs_cache_path /data/cache levels=1:2 keys_zone=fastcache:10m;

slowfs_temp_path /data/temp 1 2;

And the cache enabling section:

location / {

root /data/nfs;

slowfs_cache fastcache;

slowfs_cache_key $uri;

slowfs_cache_valid 1d;

index index.html index.htm;

}

One nifty feature of the Slow FS module is cache purging. It can be enabled with the following configuration block:

location ~ /purge(/.*) {

allow 127.0.0.1;

deny all;

slowfs_cache_purge fastcache $1;

}

This allows purging only for requests coming from the local machine.

Let’s test it with curl from inside of the docker container:

$ docker exec nginxslowfs_nginx_1 curl -i 'localhost:80/purge/test-file.txt'

HTTP/1.1 200 OK

Server: nginx/1.6.2

Date: Tue, 24 Mar 2015 13:04:25 GMT

Content-Type: text/html

Content-Length: 263

Connection: keep-alive

<html>

<head><title>Successful purge</title></head>

<body bgcolor="white">

<center><h1>Successful purge</h1>

<br>Key : /test-file.txt

<br>Path: /data/cache/1/27/2bba799df783554d8402137ca199a271

</center>

<hr><center>nginx/1.6.2</center>

</body>

</html>

Nginx says that it was successful, but you can always double-check with the following command:

$ docker exec nginxslowfs_nginx_1 tree -A /data/cache/

/data/cache/

└── 1

└── 27

Bonus

Now, because you came so far, I would like to honour your effort. I want to appoint you as the Cache King of Nginx’s land.

Please wear this crown with pride and swear to use cache wisely and spread its power all over the world.